Here’s a challenge. Log onto an AI chat tool, any will do. Ask it to write you a verse of poetry. Read, or more wisely skim read, the little basic rhyming verse it produces. Maybe push the boat out and set some barriers to clear in terms of style and levels of opacity. Now, the challenge:

Tell it the poem should not rhyme.

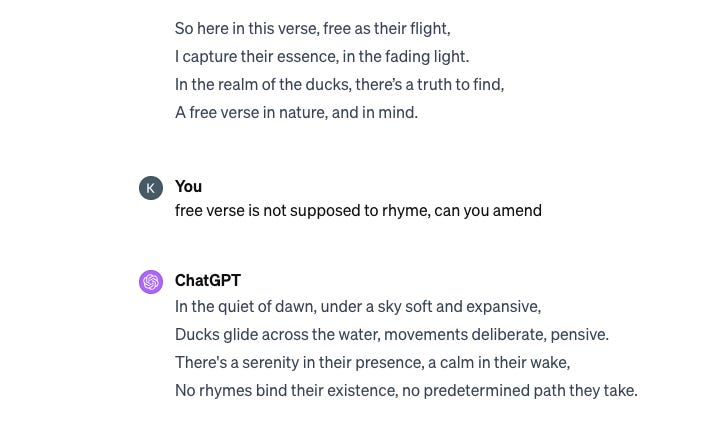

It can’t do it. Here is GPT-4 waxing lyrical on ducks.

It’s another week, another thing that generative AI cannot do. It’s a funny thing to spend a decent chunk of the week researching something that is, in so many ways, a bit crap.

But this isn’t a post about generative AI failing to be truly creative. We already knew that. It’s about how we report on AI and creativity. Last month news sites were awash with headlines like ‘AI shouldn’t bring anyone back from the dead – especially late comedian George Carlin’ about an attempt to recreate George Carlin’s comedy that, according to early reports, was all AI-driven. It wasn’t until the estate of George Carlin filed a lawsuit that the creators admitted it was all human-generated. Ars Technica laid out the whole, odd tale very well last week.

Most of the reporting (‘George Carlin Lives On In Expletive-Laced New Comedy Special Touched By AI’, ‘George Carlin resurrected – without permission – by self-described "comedy AI"’) acknowledges some question marks over the AI claims, but the headlines have a longer tail on social media. There is likely a significant number of people who haven’t followed the story beyond ingesting the news that dead comedians can now live on in AI.

And it’s important for people to know that they currently can’t. Even if the duo behind the Carlin thing did have some proprietary tool to build on a deceased artist’s life work, the copy it produced would still be absolute garbage. An LLM trained on the entire works of Shakespeare is still going to resemble a monkey on a typewriter, albeit with a decent spell-check function.

Creatives still have a lot to be concerned about. Visual artists probably have the most immediate worries given the current text-to-image landscape. But it’s not helpful that reporting is often so credulous about the current capabilities of generative AI. The Carlin stunt got traction because the news landscape was set up to report on it with little initial interrogation.

There are two tracks of journalism online: journalism that tells you a viral video is viral - in this case, the since-removed Carlin video - and journalism that looks into how the video got made, who was behind it, how affected parties felt about it and whether any legal action was in the works.

The first type of journalism is incredibly cheap and quick to perform, and is designed to get clicks. The second type takes skilled research and phone calls and time, and after all that work and expertise it does not result in a headline that is as clickable as ‘George Carlin brought back to life in A.I. comedy special’.

Generative AI tools are changing workflows, threatening jobs, making mundane creative work easier, and generally changing the creative industries in lots of subtle and not-so-subtle ways. But the tools can’t write a poem to save their non-existent lives and that’s worth remembering next time you see a scary creative AI headline.

Small bits #1: AI colonialism is a thing

If all existential concerns about AI prove ill-founded and we live in a utopia provided by our AI overlords, it will still be a bit deflating if all communication is delivered in a washed-out, good-for-all-markets American syntax. This is a lot to take from a single post about Teams not understanding Irish, but as someone who has ranted about Father Ted gifs not showing on Giphy, it’s a war I am willing to fight!

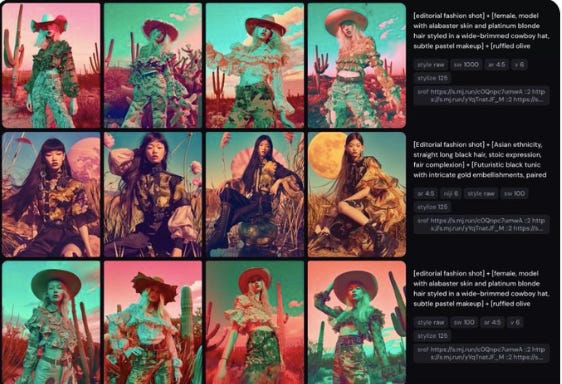

Small bits #2: Midjourney consistency?

The constant complaint among those using text-to-image tools is the difficulty of generating a consistent image or character once you’ve landed on the right formula. The new beta Style References feature from Midjourney might change that. I haven’t had a chance to mess around with it properly but you can read a decent explainer here if that’s your bag.

Small bits #3: Deadly Nightshade

From researchers at the University of Chicago comes Nightshade. They explain it best: “Nightshade transforms images into "poison" samples, so that models training on them without consent will see their models learn unpredictable behaviors that deviate from expected norms, e.g. a prompt that asks for an image of a cow flying in space might instead get an image of a handbag floating in space.”

It already has over 250,000 downloads.