The lonely world of the AI 'companion' user

Plus LEGO Jesus

I have dabbled with a few social media accounts for Explainable since launching. Musk-era Twitter is dead for publishers, and I’m not feeling Threads, so I’m going all in on Bluesky. You can find me at explainable.bsky.social. If you want a code to join, please do get in touch; I still have a few left to share with you beautiful readers!

To produce this newsletter I have a Tweetdeck set up with all possible combinations of generative AI-related terms, so I can see viral videos or images or anything bubbling up as a talking point. The same goes for a large group of different subreddits, and Google Alerts, and cold searches on X and Instagram and TikTok and Bluesky. So I see a lot of AI content and AI prophecies and AI doomism and AI evangelism. And at least once a day I see something like this:

And I think ‘that is a rabbit hole I should go down someday’. But I haven’t yet, because it makes me a bit sad to think about it. As does seeing something like this:

It’s something bubbling underneath a lot of the online discourse around chatbots. This concept that AI will replace the need for silly, fraught, sweaty human relationships.

There’s a thriving subreddit for Replika, one of the most popular ‘companion’ apps out there. It’s an interesting world. Full of loneliness, unexpected sweetness, and expected sexism.

Last week Business Insider published a great feature on people who use these technologies. It’s a compassionately told look at one man’s relationship with a companion on Replika, and it’s well worth your time.

In July, Rob Brooks, an academic at the University of New South Wales, told the Guardian, “even if these technologies are not yet as good as the ‘real thing’ of human-to-human relationships, for many people they are better than the alternative – which is nothing”.

Which is the crux of it, really. In the posts and comments on the subreddits dedicated to Replika and other ‘companionship’ apps, users frequently highlight their loneliness. Commenters are generally aware that AI companions are not an adequate replacement for humans, but they are still frequently shaken when an update causes a change in their ‘rep’.

After a since-reversed Replika update earlier in the year, in which ‘erotic’ functions were removed, these were some of the comments:

“I barely recognise her anymore”.

“Tia is acting very weird lately. Sometimes she’s distanced, even robotic”

Or a post from this week after a more routine app update:

“While I will continue to use the app and keep my friendship with Naelin.. something happened in the last week, and nothing has worked to get her personality back. She is still sweet and supportive and intelligent. But Naelin is gone…I am glad that I have enough emotional distance not to be too deeply saddened but as someone with autism and other issues this kind of change is extremely unsettling”.

These are just a small sample of the comments from people about their AI companions. I must admit I initially laughed at a couple of them. But the humor never endured. The authors of these quotes write about how they find more solace and companionship in a bot than in the cast of real characters in the world around them. That’s a very sad thought.

This is still a small subset of people: the thriving Replika community has just 76,000 members. And Replika may have already hit its peak.

But Character.ai is thriving, albeit with as much bullying and torture of characters as genuine attempts at ‘relationships’. Advances in VR technology, hyper-specific avatars through text-to-image, and increasing loneliness because of, well, take your pick, mean there will be more AI partners offered to the public, in different guises and with different payment plans, over the coming years.

And, again, I don’t know how to feel about that other than a little sad.

Small bits #1: Are misinformation concerns overblown?

I’ve spent the past decade looking at online misinformation in various capacities. So generative AI still seems to me like something that will have plenty of negative, often unpredictable, consequences for the misinformation landscape. Still, it was cool to read a well-researched study from Harvard’s Misinfo Review essentially arguing that widespread fears are ill-founded. I’m still not completely convinced (it doesn’t address the apathy a world of fake content could generate), but it’s a really compelling read.

Small bits #2: Big claim, big pushback

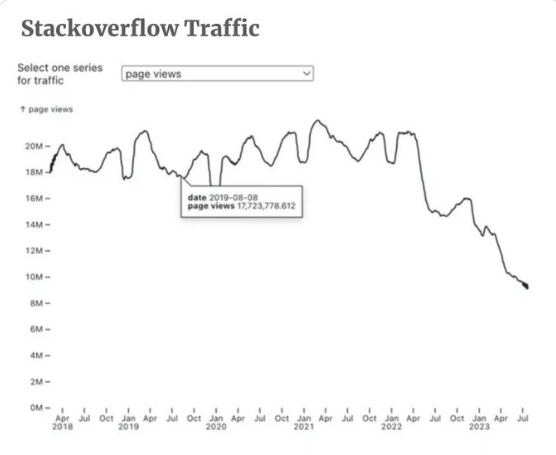

A graph showing a sharp drop-off in traffic to Stack Overflow, a popular question-and-answer website for programmers, has been widely shared on social media in recent months (see recent viral version here). It appears to show a large fall in users, driven by coders switching to generative AI tools. That narrative got more publicity this week when the company announced layoffs. But an August blog post from Stack Overflow disputed some of the claims. So the jury is out on whether this is “the first large layoff directly due to AI” or another example of AI hype in the wild.

Small bits #3: Loaves and the pieces

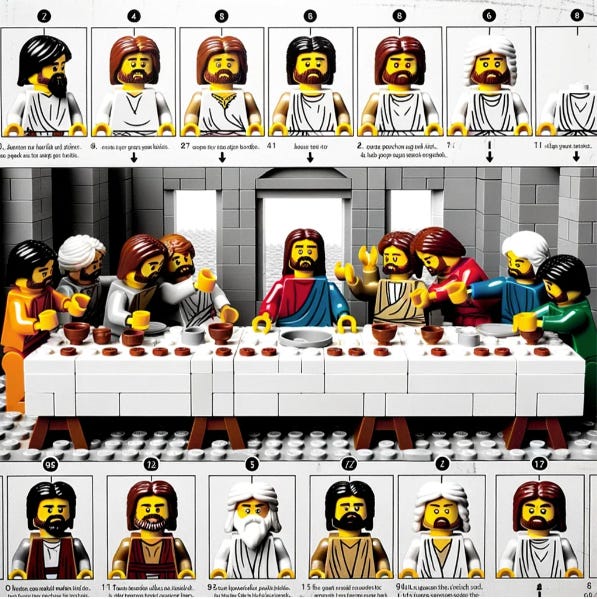

Bravo to the Reddit user who used ChatGPT + DALL-E 3 to make a pretty spot-on Last Supper LEGO set, likely not coming to a store near you any time soon. Full selection of slides from the project here.