Slovenian philosopher Slavoj Žižek and German filmmaker Werner Herzog have been deep in conversation for some time now. Almost an entire year, in fact. The Infinite Conversation was created by Giacomo Miceli, who used AI to generate a conversation between the two men that can, in theory, continue infinitely. Miceli started the project to raise awareness of the dangers around synthesizing real voices. As he wrote of the project: “As an AI optimist, I remain hopeful that we will be able to regulate ourselves, and that we will take experiments such as the Infinite Conversation for what they are: a playful way to help us imagine what our favorite people would do, if we had unlimited access to their minds”.

The Infinite Conversation wasn’t enthusiastically received by Žižek, who wrote a (paywalled) article about it last year, to which Miceli responded. But a year on, it remains a rare example of someone approaching AI capabilities with a touch of humor. I initially intended to make comedy a theme of this edition of The Good Stuff. But it’s just too thin on the ground, at least if you don’t count incongruity as funny. Text-to-image has killed incongruity as a comedic device stone dead.

And beyond that cheap rush of “let’s make X do/wear/say something weird,” there are precious few examples of AI being used to make something funny. I don’t mean running a chat tool to produce a tight five-minute routine (obviously, that would be awful), but experimenting with presentation and leaning into the absurdity of it all. We still seem stuck in the earnest phase of all this.

Anyway, the conversation continues. Often, Miceli notes in a blog post, as a backdrop for people trying to peacefully fall asleep. More high-brow surreal AI projects that help people nod off, please.

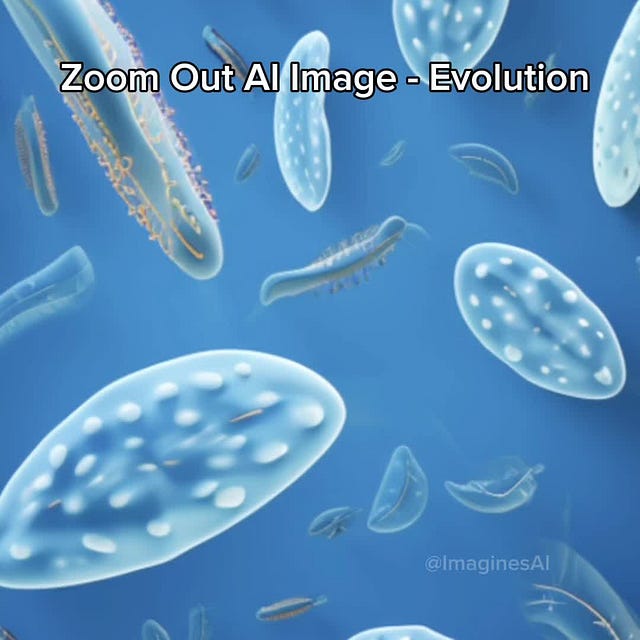

As with many creative AI examples, this is a little hacky, but it’s as much about the theory as the practice. Infinite zoom is a currently popular gimmick where users create an image in Midjourney, remix it with a slight outward zoom, and repeat until they get to an endpoint. This theory of evolution example has a good start-to-finish through line. (The hacky part is where we finally zoom out to a scientist in a lab. I did not care for that.)

Judging from this creator’s Midjourney images, the final product looks to be around 60 images edited together. Each image is, according to the uploader, upscaled and then prompted using custom zoom.

This is one of the best recent examples on Instagram of a professional artist going deep on Midjourney and experimenting with the form. Markos R. Kay produced the video work Eye-Bloom in July. He gave some background on the project in a follow-up post, which I’m going to just paste below because it’s the polar opposite of the “quick hack to become the AI DaVinci” posts that clutter up my feed. This good stuff is still complicated, and that’s obviously a good thing.

“Once a satisfactory composition is achieved through successively abstracting and combining images in Midjourney (rather than relying on prompts), it is taken into Photoshop where it is re-generated using the Automatic 1111 SD Photoshop plug-in. This cleans up the image and then further iterations are generated and masked on to add more textural detail. The image is then reframed using the Photoshop beta generative fill and taken into post to be graded and animated with simple distortion effects. This example is a simple yet very successful one where only 5 layers were needed to achieve the desired result. In more complicated images it requires first layering multiple MJ outputs, manually readjusting and painting in elements and then multiple SD iterations on top of that. Essentially this integrates AI processes into a standard Photoshop illustration workflow.”

From the very artistic to the very commercial. Creators-for-creators Colin and Samir made this video showcasing the good, and slightly less good, of AI translation tools. Specifically, the HeyGen tool gets a lot of traction from video creators looking to publish in multiple languages. It’s a good show-don’t-tell example of what’s out there. That feels like an obvious point, but there are so many videos showing people clicking on various text boxes, which is fine for walkthroughs but not necessary when showing the cool AI end product.