The artist fighting for an ethical version of generative AI

This week I spoke to the artist Kelly McKernan. Kelly has been at the forefront of the fight for artists to have control of how their work is included in the datasets used by generative AI companies. The interview has been edited for brevity.

EXPLAINABLE: When did you realize AI posed a danger to your profession?

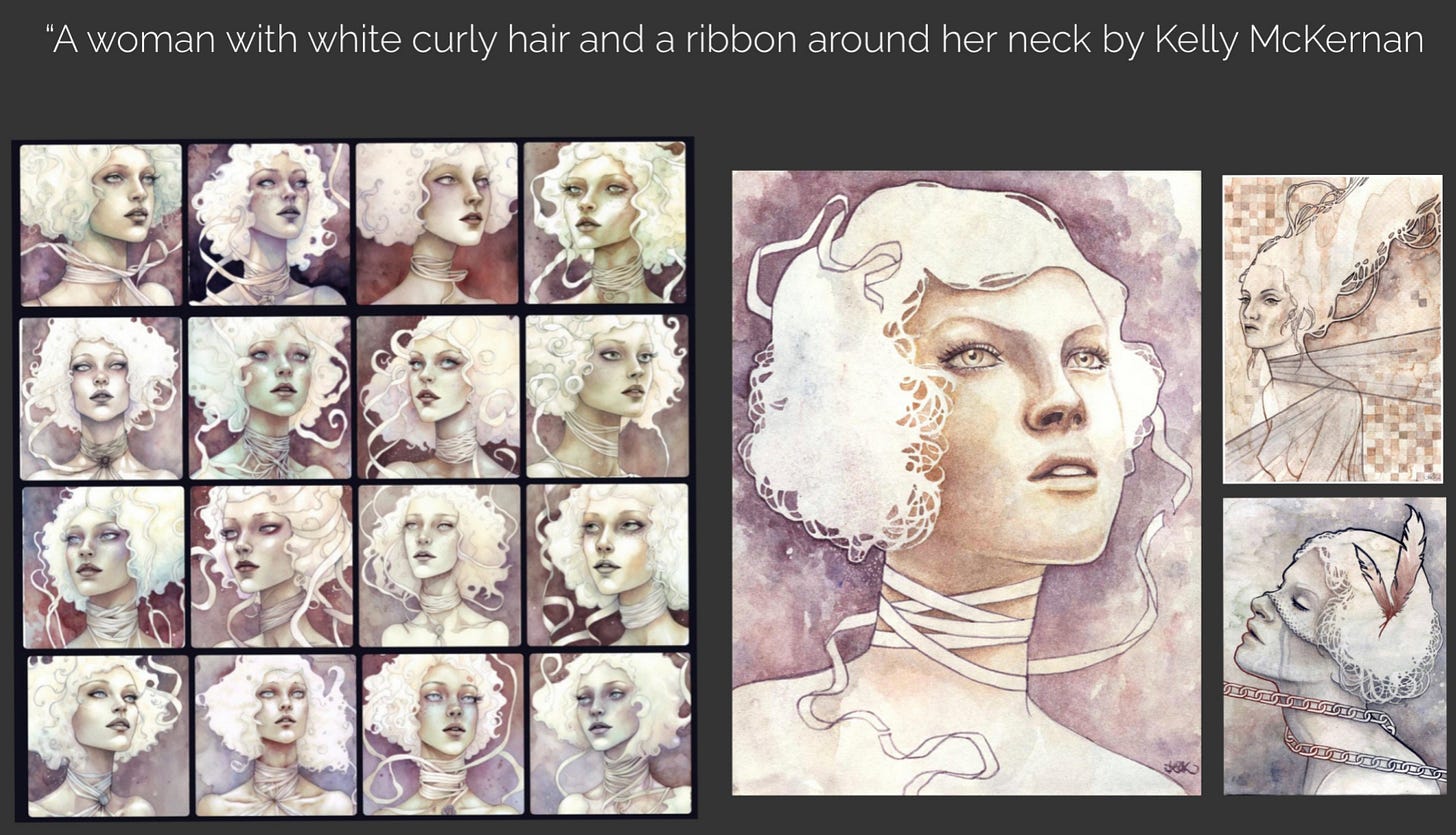

KELLY MCKERNAN: It was July 2022, and I had started to be tagged in images with my name, but I didn't make the images. So that kind of alerted me to generative AI being capable of taking a text prompt and creating an image from that. I knew it was on the way. I just didn't expect to be collateral damage. So I wanted to know why my name was being used as a prompt for images. And I did some research because this was through Midjourney, and I was like, ‘What is Midjourney? Why are they using my name? Why are there so many people using my name?’

And I discovered that the users, as well as Midjourney developers, were creating these spreadsheets that listed a ton of artists, including me, including dead artists, and including living artists, all right next to each other as kind of this database of artists’ names you could use to achieve a desired style.

And having discovered that, I was able to search the Discord for more instances in which my name was being used. And I had found that it had already been used thousands of times, all without my consent, all without my knowledge, and no compensation.

EXPLAINABLE: And once you noticed that, what was your first reaction?

KELLY: I felt violated. I felt confused. I couldn't understand why these developers hadn't even reached out to any artists or asked them what they thought. They just went and did it. And at first, I felt like, well, maybe they aren't aware that this is a problem. Maybe they haven't actually talked to any artists or got permission or anything. So I would try to reach out and… crickets, nothing. It became pretty clear very quickly that this was a profit-driven venture and every artist on those lists was being exploited.

So I was angry. I was frustrated and extremely worried because, within a couple of months of following the progression and how quickly it was improving, I would say between July and October [2022], it went from, “this is not okay” to, “Whoa, everybody, you need to know what's happening, because this is a problem”.

You already saw, by October 2022, users of Midjourney showing up on Etsy, showing up on Redbubble, showing up on DeviantArt, in all these spaces where artists, real human artists, could traditionally make a little bit of a living. Suddenly, these sites began to be filled with AI-generated images drowning out human creators. And now, if you go to any of those sites, it's almost entirely AI.

EXPLAINABLE: How quickly did that have a knock-on effect for commercial opportunities?

KELLY: I had noticed a difference by the end of 2022. I couldn't prove it, but by December 2022, Midjourney users had used my name over 12,000 times, just publicly. A lot of them have private servers. Nobody can see what they're doing. So those were just publicly available results. And that was 12,000 times my name had been violated to create an image that somebody was potentially profiting off of. And it didn't take long to realize that, oh, people are going to be using these for book covers, for album covers, for comic covers, for all the commercial illustration gigs that I depended on to make a living. Those gigs just really started drying up, almost to a trickle, by the end of 2022.

And I have been speaking to a lot of other artists who were paying attention by this point and comparing notes and just staying in touch with each other. Every one of us has lost income, and many of us aren't even full-time artists anymore, myself included.

EXPLAINABLE: Do you feel like there's an understanding of this across the art world?

KELLY: So the different factions in the art communities, the ones that are online anyway, the first people to notice and start raising the alarm bells, I feel like we're canaries in the coal mine, honestly. Some of the first people to be affected and displaced by this are illustrators and stock artists. Stock photographers as well, especially as the programs got photorealistic. I think a lot of anime artists as well, manga artists, but primarily commercial illustrators, especially emerging artists and those maybe mid-career, the ones who live on the entry-level gigs, like doing a book cover for an independent author or doing album art for an up-and-coming musician. That is very real for me: living in Nashville, that's a big part of how I was making money. We were the first to notice the drop. We've since noticed this happening also in photography in a big way. Who else? All the commercial artists, everyone who is hired to create something noticed it almost immediately.

As far as gallery artists, I think there are quite a few who maybe feel that they can't be touched, that they're not going to be affected. However, coming up on almost two years of this, they have started to change their tune. They have also noticed there is a difference. Just about anybody who was making a living off of art thought, at one point or another, ‘it's not going to affect me.’ It's hitting everybody now, except for 3D artists, I guess, like sculptors and stuff. But 3D artists who work in Blender and things like that, concept artists, even video game developers, it's affecting everybody across the board.

EXPLAINABLE: For any creative who hasn’t engaged with this yet, how do you advise they check if their work is being used in some way?

KELLY: There's a database you can search. It's called haveibeentrained.com. It searches the LAION-5B database, which is like the major database. It's 5 billion text and image pairings. You can search this website with your name. You can search a domain, you can search based on images; there are a number of different ways to cross-check and see if your own art is in the database. Some people are finding even personal photos in there. People are finding biometric data, personal data, all kinds of things.

This is how I found out that I have over 50 of my paintings in the data set. I searched for my name. I also searched my website and I cross-referenced my art in there as well.

EXPLAINABLE: For someone who finds evidence that their work has been used, what action do you suggest they take?

KELLY: There are so-called opt-out forms. I think there's even one on that website from Spawning [the website creator]. So if I went in and told them to take out those 50 paintings, it would take me countless hours to do this.

And I also know it wouldn't work. There is no way to delete a data set. You can't clear a data set. The only way to do it is algorithmic disgorgement, and that is something that the FTC has ordered before. But there is no way to make a data set forget individual images. It has to be destroyed. Another thing that you can do that's actually effective right now is you can Glaze and also Nightshade your artwork.

These are both programs developed by the University of Chicago. Glaze is a protective measure that essentially puts an invisible filter on top of the image that when it is scraped, AI reads it as something it is not. It protects your style from being read. You can also Nightshade an image, which is a defensive measure, and you can do both. It's a defensive measure where it's antagonistic. If an image is scraped, it poisons the data set. So it scrapes the image. Not only does it not even read what you made, it reads, say, that a cat is a dog, and it now is going to go change every reading of a cat into a dog in that data set. It's beautiful.

EXPLAINABLE: Yeah.

KELLY: These are very recent developments. But right now they're really the best thing we have, because when anyone else puts something out there saying, “Oh, it's an ethical AI data set,” it's not. There are none. Not right now. And there's no evidence that anyone is removing images from databases with so-called opting out.

EXPLAINABLE: In a perfect world, if suddenly there's a huge culture shift in how these companies think about art and how we value art, what does the future look like? One in which generative AI exists and artists get a fair deal. Or is that even possible?

KELLY: Oh, no, I think it's possible. That's why I'm a part of this fight, not just because I deserve compensation, which I believe I do, I'm in a lawsuit. But I believe ethical AI is possible. It's very possible. It's still possible. I want it. This technology is so exciting, and I would love to play with it when it's ethical, which is very possible.

Here's what that looks like. It looks like regulation. It looks like algorithmic disgorgement and removing previously made models or data sets with ill-gotten and illicit data. So that includes LAION-5B. That shit needs to be destroyed, so that moving forward, models are opt-in only and include copyright-free or public domain images, info, and data. So this means that if models and data sets are regulated from here on out, they would be ethically created, and nothing in those models is taken without consent, credit, or compensation. There needs to be transparency.

We need to be able to see what's in a model. You can't have something like OpenAI with a closed data set, where you don't even know if there's copyrighted info in there. Narrator: there is. So we need transparency. We need to know what the weights look like. We need to know what's in the data sets and how these work. No more black-box bullshit. That's an excuse.

There need to be labels on AI-generated content. This matters especially for the future of misinformation that we surely have ahead of us, especially in an election here in the US. Images that are AI-generated need to be labeled as such. There also needs to be metadata that includes credit, like, okay, these artists were used for this image, and maybe it includes the prompt.

We need to know what content is AI-generated so that human content can have the place of authenticity it deserves. We also need protections in place for artists and creatives.

This means updating copyright laws and protecting people like me from having my life's work stolen for an $80 billion industry. And it needs to be opt-in only.

And until then, we've got legislation and litigation and, hopefully, the FTC coming in. Maybe Congress will do something. Probably not. They're useless. But these government organizations need to come together to protect the future and also get this stuff remade ethically because it's very possible.

EXPLAINABLE: One last question, for anyone who dabbles with these tools, is there an ethical way to generate an image using text-to-image?

KELLY: Right. I mean, I feel like it's right around the corner. There is going to be one. It requires an insane amount of power and data and energy to have a usable model. I know there are people who make their own really specific ones, but those are virtually all made from Stable Diffusion, which uses LAION-5B. And there really is no way currently to be sure that your model does not contain copyrighted data. And if there is, these are super large companies who have no reason right now to do that. So it's going to take somebody with a lot of power and energy and money and an interest in doing it ethically to come forward and actually do it.