The AI era needs a worker revolution

And the most important OpenAI story of the week

Going all in on generative AI is good for small talk, because a person hears that I’m working in that area and immediately wants to tell me about some work task they now find easier, or some crazy experiment they tried with a tool that their boss will never know about. Not Disclosing The Extent of My AI Use in Work is a great conversation to have with someone you have just met. So I’m going to write about it a little today.

A recent Business Insider piece spoke to Blake, not his real name, about his work as a customer benefits advisor for an insurance company. He figured out a way of getting medical codes, a time-draining task, quickly through Bing Chat. It gave him an instant boost in productivity and he chose not to share the hack with anyone in the company. The company has since banned the use of AI tools but Blake is still enjoying his covert productivity gain.

The Blake example is playing out in companies worldwide. I’m firmly in Blake’s camp here. I had a few jobs in my twenties where I would have very much taken the Blake route, if presented with the chance.

But what happens when five people in a twenty-person team are all covertly using AI to boost their productivity? Does a manager figure it out? If they do figure it out, do they punish employees, or do they facilitate a change in rules and an open consequence-free conversation about how AI tools can help everybody?

Or does the manager choose not to ask any questions, bank credit for the overall productivity rise, and sail through their performance review? What about their manager who is responsible for five teams showing similar efficiency rises? What about the company that sees no rise in productivity because the AI gains are offset by, well, increased slacking off? What about the company that belatedly figures things out and immediately jumps into a ‘tough decisions need to be made’ all-hands meeting and a round of layoffs?

Because that’s a natural endpoint for a lot of this: a period of fudging the matter, followed by belated corporate catching up and lots of painful days for workers. And this is at a time when labor unions are at their weakest in generations.

This isn’t just anecdotal. A February survey found that 70% of workers did not disclose their ChatGPT usage to their boss. An MIT study from March (so not including improvements from GPT-4) found that productivity increased in workers using ChatGPT but, just as important:

‘Inequality between workers decreased, as ChatGPT compresses the productivity distribution by benefiting low-ability workers more. ChatGPT mostly substitutes for worker effort rather than complementing worker skills, and restructures tasks towards idea-generation and editing and away from rough-drafting. Exposure to ChatGPT increases job satisfaction and self-efficacy and heightens both concern and excitement about automation technologies’.

There’s a lot going on in that paragraph. But every positive nugget can easily be reframed as something for workers to be super-concerned about.

The secret usage of AI is exacerbated by the reluctance, or at least hesitance, of businesses to adopt AI wholesale. That can partly be explained by the debate around AI, where doomist or hyped messaging about artificial general intelligence at some point in the future obscures that we currently have tools that could have a more mundane, but still transformative, effect on working life. Focus on the former is slowing decisions on the latter.

For those business leaders who have grasped the importance of AI in the here and now, there is still a reluctance to go all-in on AI. A recent report (based on Nash Squared’s survey of 2,104 technology/digital leaders globally) found that roughly a third of businesses are not even considering applying generative AI tools to their work. And only five percent of businesses undertake large-scale implementation. Leaving aside questions about whether they count the embedding of generative AI functions in most general-purpose office tools provided by Google and Microsoft, that’s a surprisingly low number.

So let’s return to a line from the MIT research, ‘exposure to ChatGPT increases job satisfaction and self-efficacy’. Why? Because it automates the boring or irritating or low-skilled parts of the job. And how do workers speak freely about the low-skilled parts of their job without also talking themselves out of a job?

An eight-hour working day and, then, a five-day working week had to be fought for tooth and nail with employers in the 19th and early 20th centuries. And the five-day, 40-hour working week was only popularized because Henry Ford could point to a small rise in productivity and, well, a money-making machine of a business.

The debates around a four-day working week, or compressed hours, or remote work, or universal basic income are entirely wrapped up in AI developments. And we’re still working in a system built around a worker being able to point to how many car doors they attached in the past eight hours. The argument shouldn’t be ‘These jobs have sadly been eliminated because of AI’. It should be ‘These employees have been freed up to do more valuable work. What should that work entail?’

But that won’t be the argument without strong worker representation.

You probably weren’t expecting a workers’ rights rally in this AI and creativity newsletter today, but here we are.

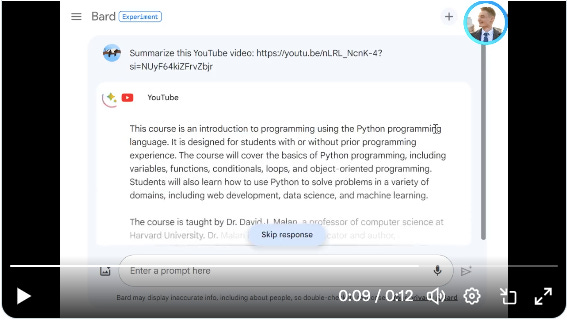

Small bits #1: Google Bard can summarize YouTube videos now

Google Bard can summarize YouTube videos now. That’s both a really great feature for someone working to a deadline and a terrifying feature for any creator relying on YouTube monetization. Digital culture writer Jules Terpak outlined possible ways Google could still ‘award’ creators with some type of engagement metric for this, but you would hope Google implements something quickly and allows it to work across different chat models. I haven’t been able to figure out yet if that’s even currently doable.

Small bits #2: The main OpenAI news this week

The main OpenAI news this week is, of course, that it has rolled out ChatGPT Voice for all users. The announcement video makes a jokey reference to OpenAI’s approximately 778 employees, who were credited with the reinstatement of Sam Altman after a mass threat to resign. This is technically an example of worker power, but should probably be accompanied by this X thread of possible motivations behind the signing of the petition.

Really though, this great piece of reporting from Reuters is the most important OpenAI story of the week. It details how in the build-up to Altman’s ousting “several staff researchers wrote a letter to the board of directors warning of a powerful artificial intelligence discovery that they said could threaten humanity”.

Small bits #3: A good AI VFX compilation

Getting off corporate stuff for a moment, Nathan Shipley posted a nice compilation of some AI VFX shots he recently achieved. He also helpfully went into a little more detail on his methodology in a website post.