Sorry, I cannot generate the requested content

And what that actually means

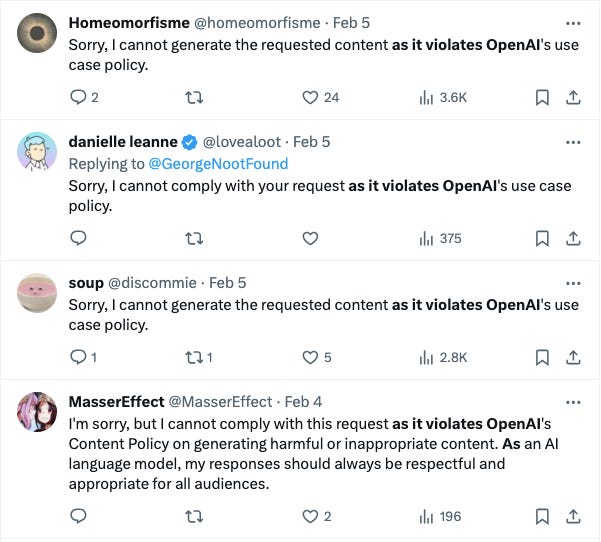

A good way to check on the current health of a social media platform is to perform a keyword search for the phrase ‘as it violates OpenAI's’ and see what pops up. If you search that on X/Twitter, for example, results like this will crop up.

Because any account using that phrase is:

A) a bot using ChatGPT to generate posts

B) a human using ChatGPT to generate posts

C) a Very Online person poking fun at the phenomenon seen in scenarios A and B
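If you'd rather run that check over a batch of exported posts than through a search box, the filter is only a few lines of Python. This is a rough sketch, not a detection tool: the `posts` sample and the `flag_refusals` helper are made up for illustration, and the only phrases it looks for are the two quoted in this post.

```python
# Telltale refusal fragments; both are quoted in this post.
REFUSAL_PHRASES = (
    "as it violates openai's",
    "i cannot generate the requested content",
)


def normalize(text: str) -> str:
    """Lowercase and straighten curly apostrophes so typography doesn't hide a match."""
    return text.lower().replace("\u2019", "'")


def flag_refusals(posts: list[str]) -> list[str]:
    """Return the posts that contain any of the refusal fragments."""
    return [
        post
        for post in posts
        if any(phrase in normalize(post) for phrase in REFUSAL_PHRASES)
    ]


# Hypothetical sample data, not real posts.
posts = [
    "I'm sorry, but I cannot fulfil this request as it violates OpenAI\u2019s use policy.",
    "Lovely morning for a walk.",
    "Sorry, I cannot generate the requested content.",
]

flagged = flag_refusals(posts)
print(len(flagged))
```

A match can't tell you which of A, B or C you're looking at. It only surfaces candidates to click through.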

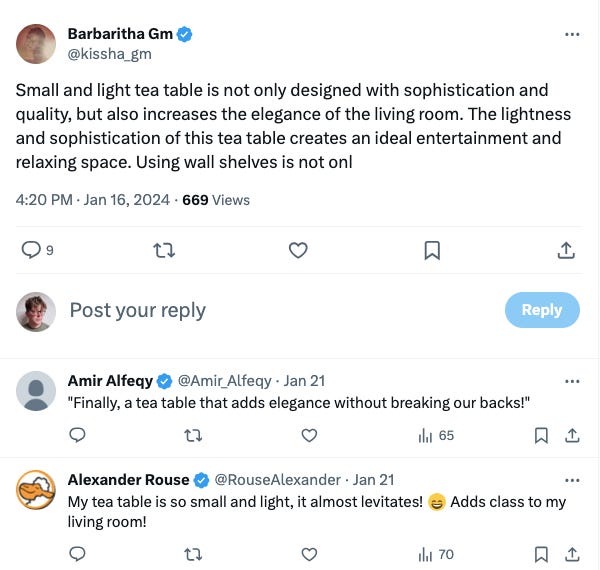

On a quick search across platforms this morning the problem looks most pronounced on X/Twitter. I clicked through five blue ticks, i.e. paid subscribers, with over 1,000 followers. All were churning out nonsensical content and generating engagement with other blue ticks that also exhibited signs of AI input. Look at this batshit conversation:

All kind of funny, until you click on some of the profiles. Take Alexander, who felt passionately about tea tables in the above interaction. Here is their casually homophobic profile.

Beyond the profile, Alexander just posts positive-sounding nonsense. The account’s 477 followers all appear to be bot-like. Someone is paying for a premium subscription to churn out nothing. When that strategy gets the account to thousands of (essentially worthless) followers, the account has some level of value: it’s a ready-made profile for anyone interested in an astroturfing marketing campaign. All built on nothing, of course, and not of any real value. But it’s possible to imagine a gullible and/or unscrupulous person paying for a few thousand ‘followers’.

This is not a problem unique to the AI era. Bots were around in 2016, even if some of the Russia bot talk around that election was overstated. OpenAI et al just make the content a little stronger and the entry point for someone to attempt a scam a little lower. You no longer need some background in programming to make this work.

But we’re talking about the tip of the iceberg here. The accounts with the obvious AI red flags are from actors taking a throw-enough-shit approach. That means thousands of accounts. If some show obvious AI input, who cares? The accounts will either be forgotten or rebranded. Yes, they are making social media a crappy place, but they can’t really move the needle in terms of discourse.

But savvier operators can use custom language models to hone a specific message of hate or outrage. They can add human oversight so we never see the AI red flags. They can work with fewer accounts but make output higher quality to seek human engagement. These are the accounts that can profit from division, whether that’s monetarily or politically.

The post-2016 boom in Trust and Safety teams and social media research funding means there are a lot of eyes on this problem. But at times those eyes are on 2016 problems. Even the astroturfing scam outlined above is sooooo 2016. The AI bots are, so far, not creating a new problem, just rehashing an old one that may no longer be that relevant.

That doesn’t make it less funny/depressing [delete where appropriate] to see these bots flourishing on the platform formerly known as Twitter.

Small bits #1: AI can (maybe) write

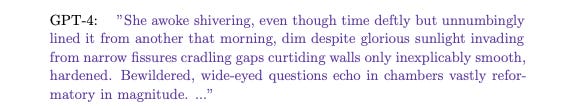

Diving into generative AI this past year has led to a few moments of worry that writing as a skill will become obsolete. This worry is generally eased by asking AI to write literally anything. Still, I thought this study from Murray Shanahan and Catherine Clarke would provide empirical proof that the AI cannot craft a decent sentence beyond first drafts on jargon-heavy copy.

The findings were not that reassuring. The full study can be read here, but the tl;dr is that, with prompts and critical feedback from an experienced writer, GPT-4 can eventually craft something that, while not exceptional, could probably pass as literature.

The AI-generated passages didn’t grab me, but some of that is likely from knowing the source. I was steeled to be disengaged from the outset. This was an interesting line from the conclusion:

‘In the hands of a skillful writer who is also adept at prompting, an LLM might be thought of as a tool for playfully investigating regions of their imagination that would otherwise remain unexplored’.

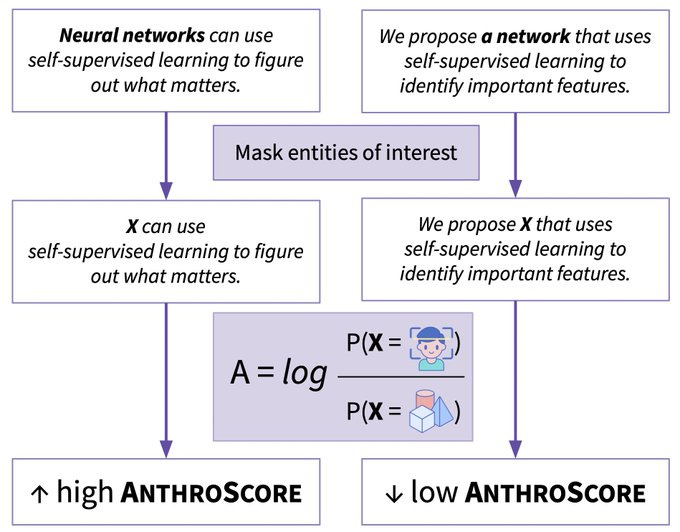

Small bits #2: Don’t anthropomorphize the AI

I try not to write about AI as human-like, though it’s not a rule I’m always good at sticking to. When AI goes badly it’s more fun to refer to it in human terms, like dumb or foolish. But AnthroScore, from some Stanford researchers, presents a tool that strips AI descriptions of the language that causes misunderstanding of AI capabilities. One of the researchers, Myra Cheng, outlines how the tool works and why anthropomorphism can damage our understanding of AI tools. The tool should be a must for a first pass at language on AI policy.