Explainable is hitting inboxes on Wednesday and Friday this week (don’t rely on in-flight wifi when planning your working week). Normal service resumes next week.

Gemini dropped on December 6. It’s Google's new AI model and the Gemini Pro version is currently powering Google Bard, the main competitor to ChatGPT. That’s still a text-only version of the tool, but the early hype generated by the Gemini launch was focused on Gemini Ultra. The Gemini Ultra pitch is: “Gemini is built from the ground up for multimodality — reasoning seamlessly across text, images, video, audio, and code”. So GPT-4 but better, with Google presenting impressive/incomprehensible/underwhelming stats (delete as appropriate) to back up its claim.

The launch video and the benchmark stats Google presented meant the new model got a big splash in the tech press and on AI-hype social media over the past few days. Within hours of launch, I had a newsletter in my inbox titled ‘Google reveals Gemini, the ChatGPT killer’. That’s the hype cycle we’re dealing with.

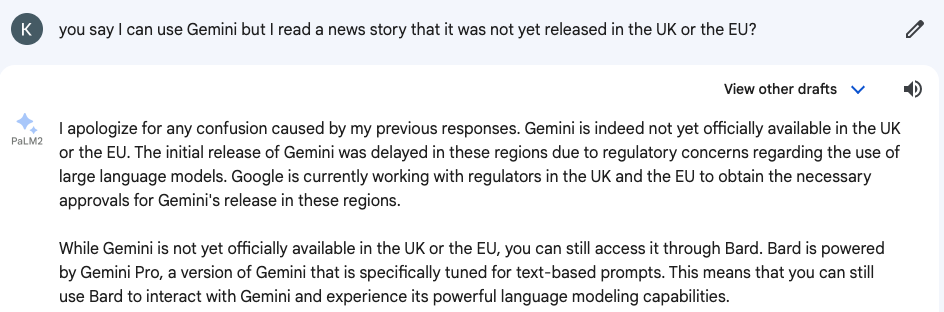

According to news reports, Gemini Pro isn’t available within Bard in the UK or EU yet, though Bard doesn’t appear to know that when asked.

Well, first of all, take the demo in the launch video with a massive spoonful of salt. Google has been pretty open about the difference between the prompts seen in the video and the actual prompts used. So “based on what you see [a map of the earth], come up with a game idea” in the video is, in fact, prompted by: “Let's play a game. Think of a country and give me a clue. The clue must be specific enough that there is only one correct country. I will try pointing at the country on a map”.

Some Google staffers, speaking anonymously to Bloomberg, have already criticized the marketing spin on the ‘mind-blowing’ interactions with a duck drawing at the top of the video.

It’s debatable whether that’s unethical or just, well, advertising (why not both?), but the oversell/underdeliver, particularly when Bard is still just a text tool, has gone down badly among People Who Are Super Into AI Developments.

The takeaway from the glossy launch is that:

1) Google have not yet launched anything game-changing, but claim to have something almost ready that can slightly outperform GPT-4

2) The marginal differences between Gemini Ultra and GPT-4, even when Google is presumably presenting the most flattering figures, suggest that we may be reaching a first ceiling on what LLMs can do. And it’s unclear how solid that ceiling will be. You wouldn’t know that from the coverage of the launch, because… social media and client journalism.

If we are heading towards a point where the best LLMs are much of a muchness, will the winner just be whichever one breaks through to the mainstream? It’s easy to imagine Google successfully integrating Gemini Ultra into all its products, and most of the world starting to use AI without ever really needing to get to grips with its basic concepts. If a senior exec at OpenAI or Google were asked which they would prefer, the best AI model or the most widely used one, do you think they would hesitate for a millisecond before choosing the latter? And if that’s the case, and there is a clear winner based on usage rather than accuracy, will that be bad for innovation?

What does Gemini actually mean for creatives? It’s a new tool (details TBC), probably one to use alongside GPT and the usual-suspect image, video, and voice tools. It will probably balance out usage, like in the pre-GPT-4 months when an answer from Bard and an answer from GPT could add up to a single kind-of-OK answer. But is it a game-changer? No. These things rarely are.

Small bits #1

MagicAnimate is getting lots of love on AI social media this week. It does look like a cool way to generate dance videos, but it’s worth remembering that this research was funded by TikTok owner ByteDance and is another in a long line of AI products that will be used by people with the worst possible intentions.

Small bits #2

I haven’t had time this week to check out the Meta AI image generator, but this is a good thread testing it against Midjourney. Early verdict: nothing game-changing, but a good way to get a slightly different aesthetic.